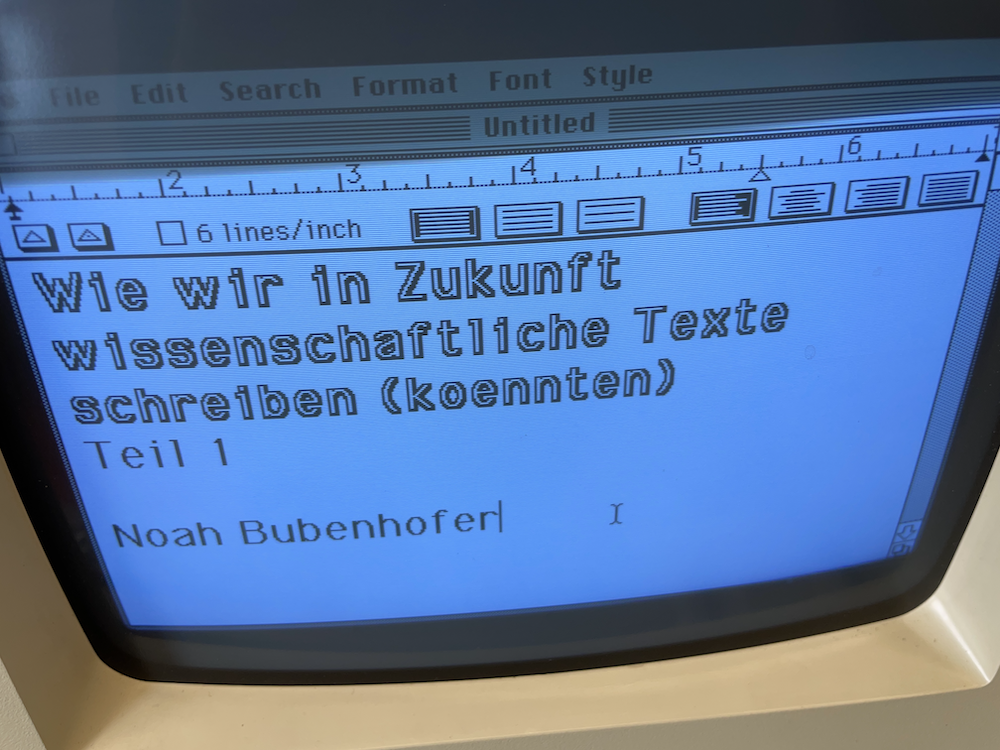

Radical changes in the interaction of machine and human in writing texts are underway. Artificial intelligence can automatically compose, translate and edit texts. Yet we still plague ourselves with word processors stuck between typewriter and code editor. And in the sciences, there are still arguments about what the right language of publication is, how to cite, and how to detect plagiarism. In the near future, scientific writing may have changed so much that these questions will become obsolete. This is part 1 of a series.

(This is the English translation of the original German article: Wie wir in Zukunft wissenschaftliche Texte schreiben (könnten) – Teil 1)

Theses

When it comes to academic writing, in which I include research, there could be big changes in the coming years. This is partly due to the new possibilities of large language models used in artificial intelligence, as ChatGPT, for example, is currently demonstrating. But it is also due to the fact that there is finally an opportunity to stop thinking of writing with computers as a better form of writing with typewriters, and to start thinking about it in a completely new way.

Here are the five theses I want to write about below:

- Writing and research support by AI: Artificial intelligence systems for generating texts will not produce meaningful scientific texts, but will be a huge help to us in writing and research.

- The question of the right publication language is obsolete: I write my scientific text in the language in which I prefer to write. The readers themselves decide in which language they want to read it. (Cf. Part 2.)

- Institutional and disciplinary guidelines for specific bibliographic formats are superfluous: Citation can finally be considered completely detached from formalities.

- No more plagiarism: Because citation and the adoption of ideas will be solved differently from a technical point of view anyway, and research processes will be different with AI support, there will be no more plagiarism in the true sense of the word.

- Other machines and programs: The machines and programs we use to write scientific texts will have to change radically. Word & Co., OpenOffice, but also LaTeX or collaborative software such as Google Docs, Onlyoffice, etc. are completely unsuitable tools. The computer systems we work with (laptops, external screens, keyboards, tablets) are also unsuitable.

Writing and research support through AI

In his book "Textverarbeitung" (Text Processing), Till Heilmann distinguishes three types of writing with and for the computer: writing for the computer but not with the computer (e.g., programming with punch cards), writing for the computer and with the computer (programming in an editor on the computer), and writing with the computer but not for the computer: classical word processing.

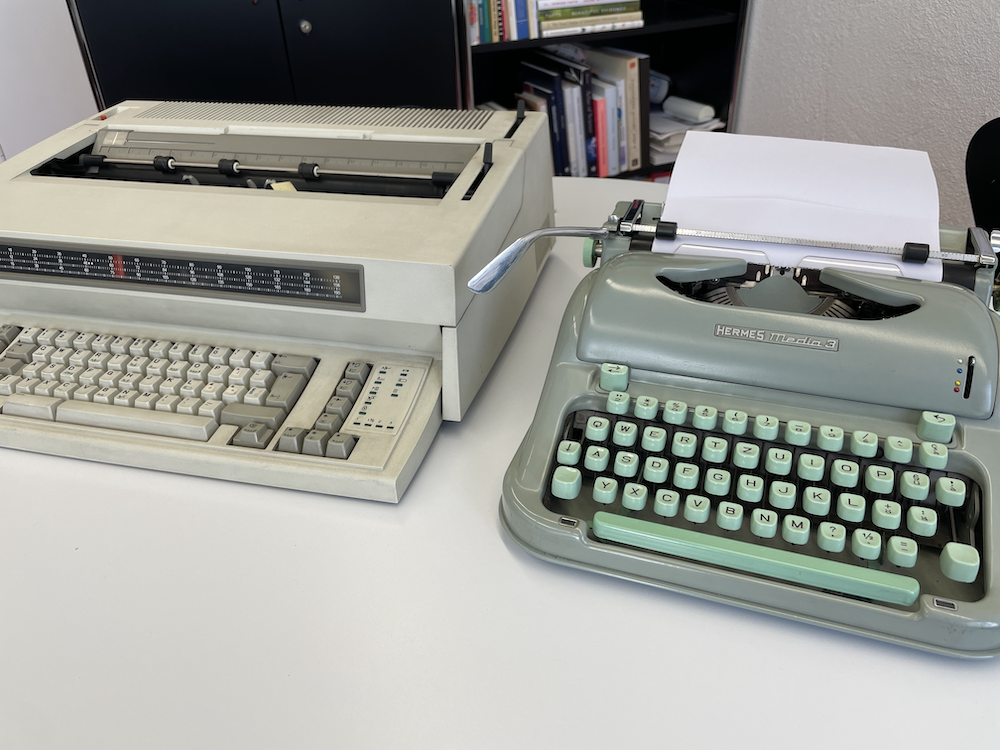

These three types of writing have also historically shaped the development of machines and programs. The mechanical typewriter was replaced by the electric one, which, thanks to its memory, created the first separation between typing and printing: before a line was printed on the paper, the input could be checked and corrected if necessary.

The first computers were programmed by plugging cables into plugboards or, later, by punching cards. Planning and writing the program happened outside the computer, which was impractical in the long run. So the idea was born to give computers an operating system and, building on it, software (editors) with which a program could be written directly on the computer, its execution monitored, and the program corrected immediately.

Only later did the idea arise to use such editors for writing other kinds of text as well, taking advantage of the live manipulation of text that the computer made possible.

These two strands of development, programming (writing for the computer) and writing (for us), led to two different types of software for working with text: editors and word processors.

Both kinds of writing can be done in either. An editor, however, is characterized by the fact that it saves files as plain text (the so-called txt format), so they can be opened in any other editor without problems. In addition, it usually offers so-called "syntax highlighting", i.e. it displays the structural elements and instructions of a programming language in color, and it offers various aids for writing program code. Printing, moreover, plays a subordinate role: the length of a text is measured not in pages but in lines. If a logical hierarchy can be derived from the structure of the text or program, this affects the various ways the text can be displayed: lines that are hierarchically subordinate to another line can, for example, be folded in and out.
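The folding behavior just described can be approximated from indentation alone. The following Python sketch assumes, for illustration, that hierarchy is expressed only through leading spaces; real editors typically also use the syntax of the language or explicit markers, and the function name `fold_regions` is hypothetical.

```python
def fold_regions(lines: list[str]) -> dict[int, int]:
    """Map each foldable line index to the index of the last line it would hide.

    A line can be folded if the following non-blank lines are indented more
    deeply; the fold ends before the next non-blank line at the same or a
    shallower indentation level.
    """
    def indent(line: str) -> int:
        return len(line) - len(line.lstrip(" "))

    regions: dict[int, int] = {}
    for i, line in enumerate(lines):
        if not line.strip():
            continue  # blank lines never open a fold
        level = indent(line)
        end = i
        for j in range(i + 1, len(lines)):
            if not lines[j].strip():
                continue  # blank lines do not end a fold
            if indent(lines[j]) <= level:
                break
            end = j
        if end > i:
            regions[i] = end
    return regions


source = [
    "def greet(name):",
    "    if name:",
    "        return 'Hello, ' + name",
    "    return 'Hello'",
    "print(greet('world'))",
]
print(fold_regions(source))  # {0: 3, 1: 2}
```

Line 0 (the function header) can hide lines 1 to 3, and line 1 (the `if`) can hide line 2; a plain-text file needs no other information for this.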

Word processors are quite different: they still show traces of the mechanical typewriter, such as margins to be set, tabs, and a basic orientation to pages. However, they also reflect the needs of typesetting and printing: markup plays a big role (bold, italic, underline), fonts and font sizes can be selected, and the text can be designed. Most word processors follow the WYSIWYG principle (what you see is what you get): the representation on the screen corresponds to the printout. Bravo, developed at Xerox PARC in 1974, is considered the first word processor with a graphical user interface and WYSIWYG.

However, the word processor offers even more: style sheets (format templates), a concept that has more in common with the editor. These are logical rather than graphical markups: first-level headings, second-level headings, emphasis, etc. are assigned to paragraphs or strings. The graphical representation of these categories can be changed at will afterwards, and a table of contents can easily be generated automatically from the headings of a text formatted in this way.
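Why logical styles make an automatic table of contents easy can be shown with a minimal Python sketch. It assumes that a document is simply a list of paragraphs, each tagged with a style name; the `Paragraph` class, the style names and `HEADING_LEVELS` are hypothetical and do not correspond to any particular word processor's file format.

```python
from dataclasses import dataclass


@dataclass
class Paragraph:
    style: str  # logical style name, e.g. "Heading 1", "Heading 2", "Body"
    text: str


# Hypothetical mapping from heading styles to table-of-contents levels
HEADING_LEVELS = {"Heading 1": 1, "Heading 2": 2}


def table_of_contents(paragraphs: list[Paragraph]) -> list[str]:
    """Collect every paragraph with a heading style, indented by its level."""
    toc = []
    for p in paragraphs:
        level = HEADING_LEVELS.get(p.style)
        if level is not None:
            toc.append("  " * (level - 1) + p.text)
    return toc


doc = [
    Paragraph("Heading 1", "Introduction"),
    Paragraph("Body", "Some running text."),
    Paragraph("Heading 1", "Method"),
    Paragraph("Heading 2", "Data"),
    Paragraph("Body", "More running text."),
]
print("\n".join(table_of_contents(doc)))
```

Because headings are identified by their style rather than by their font size or boldness, the same function keeps working however the styles are later redefined graphically. With direct formatting alone, there would be nothing reliable to collect.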

Most word processors, however, allow a wild mix of different principles: paragraphs can be formatted using style sheets but can also be formatted directly at the same time, so that in the worst case it is impossible to see whether there is logical information behind a given formatting or not.

Word processors are thus, on the one hand, offspring of editors and still contain certain elements of them today (logical markup, search and replace, a draft view without pagination); on the other hand, they are simulations of typewriters and of typesetting and layout systems.

Over time, however, various aids have been added:

- Spelling and grammar check

- Dictionaries and thesauri

- Automatic index creation (tables of contents, indexes)

- Bibliography software embedded in word processing to facilitate citation

- Layout aids in the form of styles that can be applied to a document and also changed again

- Integration of content from other programs such as a chart from a spreadsheet with live updates

- etc.

Not all users make use of these aids. Among my students and colleagues in German studies, for example, I observe that the use of bibliography software such as Zotero is anything but common. This probably has to do with the fact that text processing today is still rooted in two completely different cultures: the typewriter culture and the programming culture. The latter wants to delegate as many routine tasks as possible to the machine and accepts recording data in a structured way (e.g., in bibliography software). The former does not even consider that the machine could take over many routine tasks, and finds it too costly to make an initial effort in order to work more efficiently afterwards.

Now, however, modern "artificial intelligence" opens up new possibilities. These systems are based on language models that have learned from very large amounts of data to reproduce knowledge and apply it to new input. One example is the latest OpenAI model, which can be used in conversation via the ChatGPT interface.

Amazing things are possible with this. For example, I can ask:

Question: How did typing change compared to today? What were the key inventions that changed writing?

Answer: With the invention of the typewriter in the 19th century, writing texts became much faster and easier. The ability to type letters on a sheet of paper using keys instead of laboriously writing by hand made it possible to compose written documents more quickly. The typewriter was also an important advance in terms of text legibility, as the use of the machine made writing clearer and more distinct compared to handwriting.

Another important invention in terms of text writing was the computer. The use of computers and writing programs made texts even faster and easier to compose. The ability to edit and correct texts was also greatly improved.

Overall, text writing has changed significantly with the use of typewriters and computers and is now a much faster and easier process than it was decades ago.

ChatGPT, December 6, 2022 dialogue (translated, original in German)

The dialogue can be continued, and the system can refer back to earlier parts of it.

However, the following is also possible:

Question: I want a script that reads in a large corpus as a text file, computes a model with word2vec, and then clusters it.

Answer: Here is a simple example of a Python script that reads in a large corpus as a text file, computes it using Gensim’s word2vec model, and then clusters it.

```python
from gensim.models import Word2Vec
from gensim.utils import simple_preprocess
from sklearn.cluster import KMeans

# Read in the corpus as a text file
with open("korpus.txt", "r", encoding="utf-8") as f:
    # Tokenize the corpus (split the text into individual words or "tokens");
    # Word2Vec expects a list of token lists, here one list per line
    sentences = [simple_preprocess(line) for line in f]

# Compute the Word2Vec model
model = Word2Vec(sentences, vector_size=100, window=5, min_count=5, workers=4)

# Get the Word2Vec vectors for the words in the corpus vocabulary
words = model.wv.index_to_key
vectors = [model.wv[word] for word in words]

# Cluster the Word2Vec vectors using the k-means algorithm
kmeans = KMeans(n_clusters=10)
clusters = kmeans.fit_predict(vectors)

# Output the assigned cluster for each word in the corpus
for word, cluster in zip(words, clusters):
    print(f"{word}: {cluster}")
```

The script computes the Word2Vec model for the given corpus and then clusters the vectors of the words using the K-Means algorithm. The assigned clusters for each word are then output.

Please note that this example is very simplistic and may not be suitable for all use cases. For more accurate and reliable computation of Word2Vec models and clusters, further steps such as fine-tuning the model parameters or using other clustering algorithms might be required. It would always be advisable to look into the matter in detail and seek professional help if necessary.

ChatGPT, December 7, 2022 dialogue (translated, original in German)

Many experiments with ChatGPT test whether the language model can produce text that is meaningful and interesting in terms of content. This works to a certain extent, but mostly results in a rather boring paraphrase of existing knowledge. It is much more interesting, however, to use it to bridge the gap between typewriter culture and programming culture. Tasks that could be outsourced to an AI include, for example (* = already possible with ChatGPT; (*) = partly possible):

- Please summarize for me the state of research on topic XY over the last five years.

- Create an abstract of my text. *

- Give me definitions of XY in the literature. (*)

- Please paraphrase this table of statistical values in three sentences. *

- Describe what the discipline means by XY. (*)

In addition to such content-related tasks, there are also many more technical ones:

- Here is a list of references to papers in an unstructured format: please convert it into a structured format so that I can easily import it into my bibliography software. *

- Please check the references for correctness and complete their bibliographic details properly.

- Object language should be in italics, but I forgot to use an appropriate style. Please create a style "object language", find all passages containing object language, and assign this style to them. Define the style as italic.

- Journal XY requires a period after the author names and the year at the end of each bibliography entry; please change this accordingly. (*)

- Create a Python script to convert these value tables into a chart. *
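Reformatting a bibliography for a journal becomes trivial once references are stored as structured records, as bibliography software does, rather than as formatted text. The Python sketch below renders one record in two citation styles; the `Entry` fields, both style functions and the sample entry are hypothetical illustrations, and "Journal XY" is the placeholder journal from the list above.

```python
from dataclasses import dataclass


@dataclass
class Entry:
    """A hypothetical structured bibliography record (monographs only)."""
    authors: list[str]  # each as "Surname, Given name"
    year: int
    title: str
    place: str
    publisher: str


def default_style(e: Entry) -> str:
    # Author-year style: "Surname, Given (Year): Title. Place: Publisher."
    authors = "; ".join(e.authors)
    return f"{authors} ({e.year}): {e.title}. {e.place}: {e.publisher}."


def journal_xy_style(e: Entry) -> str:
    # Hypothetical "Journal XY" style: period after the author names, year at the end
    authors = "; ".join(e.authors)
    return f"{authors}. {e.title}. {e.place}: {e.publisher}, {e.year}."


bibliography = [
    Entry(["Doe, Jane", "Roe, Richard"], 2020, "A Sample Title", "Zurich", "Example Press"),
]
for entry in bibliography:
    print(default_style(entry))     # Doe, Jane; Roe, Richard (2020): A Sample Title. Zurich: Example Press.
    print(journal_xy_style(entry))  # Doe, Jane; Roe, Richard. A Sample Title. Zurich: Example Press, 2020.
```

Adapting to yet another journal then means writing one more small formatting function, not retyping the bibliography.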

Of course, adapting the bibliography to a journal's style could also easily be done with bibliography software, but it is even more convenient this way.

The research process could also be supported by AI:

- Please check how the use of the term "Heimat" has changed in Swiss media over the last five years. I would like relative frequencies aggregated by month (per million words) and a table of the sources used.

- Create a script to convert these text files, which all follow pattern XY, into XML documents so that I can then process them with software Z. *

- Create a script to process these manuscripts using the API of Transkribus with the model XY.
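To make the requested "per million words" figure concrete: the relative frequency for a month is the number of occurrences of the term divided by the total number of tokens in that month's texts, multiplied by 1,000,000. The Python sketch below assumes the media texts are already tokenized and dated; the input format, the function name and the sample data are hypothetical, and the requested table of sources is not covered.

```python
from collections import defaultdict
from collections.abc import Iterable


def monthly_relative_frequency(
    articles: Iterable[tuple[str, list[str]]], term: str
) -> dict[str, float]:
    """Occurrences of `term` per million tokens, aggregated by month.

    Assumed input: one (ISO date "YYYY-MM-DD", list of tokens) pair per article.
    Matching is exact and case-insensitive, so compounds such as
    "Heimatland" are not counted.
    """
    hits: dict[str, int] = defaultdict(int)
    totals: dict[str, int] = defaultdict(int)
    target = term.lower()
    for date, tokens in articles:
        month = date[:7]  # "YYYY-MM"
        totals[month] += len(tokens)
        hits[month] += sum(1 for token in tokens if token.lower() == target)
    # relative frequency = hits / total tokens * 1,000,000
    return {
        month: hits[month] * 1_000_000 / totals[month]
        for month in sorted(totals)
        if totals[month] > 0
    }


sample = [
    ("2022-01-05", ["Heimat", "ist", "hier"]),
    ("2022-01-20", ["keine", "Heimat"]),
    ("2022-02-01", ["nichts", "davon", "hier", "heute"]),
]
print(monthly_relative_frequency(sample, "Heimat"))
# {'2022-01': 400000.0, '2022-02': 0.0}
```

In a real media corpus the monthly token totals run into the millions; the tiny sample only demonstrates the arithmetic (2 hits in 5 tokens give 400,000 per million).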

Implications

Writing will change greatly with the use of AI, but these changes stand in a long tradition of machine support for writing, from paper to screen and from pen to typewriter to computer. Now, at last, there is an opportunity for typewriter culture and programming culture to converge on the computer.

This has consequences, however:

- What skills are needed to use AI? After all, users must be able to formulate the task sensibly and assess the result correctly, since it usually has to be adapted, corrected and extended.

- Many activities of scientific work that were previously considered important will become unimportant: creating the bibliography and citing according to a certain style, elaborating the state of research, identifying and paraphrasing much-cited literature.

- It inevitably follows that teaching must find new criteria for assessing qualification papers and scientific work. It makes no sense to insist that the bibliography be complete or formatted according to scheme X: that is a task we can delegate to the computer. Paraphrasing the state of research is also rather uninteresting, but classifying it and drawing conclusions from it are, of course, very much of interest.

- What is urgently needed, however, is the promotion of data literacy and AI literacy: the reading and interpretation of data, and a profound understanding of digitality and artificial intelligence, with their opportunities, limitations and dangers.

- And most importantly: AI is extremely attractive, but it is increasingly in the hands of commercial companies. It is becoming ever more difficult for universities to keep up technologically because, on the one hand, this requires a lot of money and, on the other hand, universities tend to respect legal and data protection restrictions and therefore cannot even access a lot of data. Commercial companies, by contrast, have the money to easily bear the risk of legal disputes. It is therefore difficult to distribute AI-supported software as open-source software.

But that’s not all. In the next part, I will address why the debate about publication languages in science should actually be settled, provided we develop a better way of dealing with machine translation.

Context

I’m currently teaching a seminar on "Stenography, Typewriters, Computers, Virtual Assistants: the Communication History of Writing Cultures", in the context of which we are also blogging and having lively exchanges (thanks to the students!). Through this, I have engaged intensively with the media and cultural conditions of writing with machines and how they have changed. Media scholar Till Heilmann, author of the book "Textverarbeitung: Eine Mediengeschichte des Computers als Schreibmaschine" (Text Processing: A Media History of the Computer as Typewriter), visited our seminar, as did linguist Andi Gredig, author of the book "Schreiben mit der Hand. Begriffe – Diskurs – Praktiken" (Writing by Hand. Concepts – Discourse – Practices). I am also in lively exchange about the media conditions of writing and about the machine processing and analysis of text with Joachim Scharloth, Philippe Wampfler, Maaike Kellenberger, Julia Krasselt, and many others. Thank you.

Pingback: How we (could) write scientific texts in the future – part 2 | Sprechtakel